Various Ways to Protect a Directory

There are many ways a directory can be protected. The method selected depends on why the directory needs protection.

Generally, protecting a directory means hiding the content of a directory from:

- Legitimate search engines.

- Specific spiders or robots.

- Non-password access.

- Non-cookie access.

- Access unless the URL of a document is known.

- All internet traffic.

But it can also mean hiding the directory itself.

Following are various methods to protect directory contents and a method to hide a directory itself.

The robots.txt File for Legitimate Search Engines

Hiding the content of a directory from legitimate search engines can be done with a robots.txt file. It tells search engine spiders/robots/crawlers not to crawl, and therefore not to index, certain directories.

Here is an example that tells all spiders not to index directory "asubdirectory":

User-agent: *
Disallow: /asubdirectory/

Put the robots.txt file into the document root directory of your domain. (The document root directory is the directory where the file of your domain's main/index page is located.)

A lot could be said about the robots.txt file. If you would like more information, see the Robots.txt file page or The Web Robots Pages.

The robots.txt file isn't suitable for hiding directories from internet traffic. The directory is as accessible as any other public directory. What it is good for is keeping the content of the directories from being indexed by reputable search engines.

Rogue spiders may scan the robots.txt file to find the very content you are trying to keep out of search indexes.

The robots.txt method can be used when the only protection you need is to keep the content out of legitimate search engine indexes.

Blocking Specific Spiders or Robots

The user-agent string of a spider or robot can be used to block it from a directory (or the entire website).

Find the user-agent string in your server logs. Also, a search for "bad bot list" can yield websites with lists of bad bots, which often include the bots' user-agent strings.

When you have the user-agent string of a bad bot, the .htaccess file can be used to block it.

If you wish to block the bot from your entire site, put the blocking code into the .htaccess file located in your document root directory. Otherwise, put the .htaccess file into the directory you want the bot to stay out of.

Here is example blocking code for the .htaccess file. Customization notes follow.

SetEnvIf User-Agent SiteSucker BadBot=1
SetEnvIf User-Agent WebReaper BadBot=1
Order allow,deny
Allow from all
Deny from env=BadBot

The above example blocks two bots, one with SiteSucker in its user-agent string and the other with WebReaper in its user-agent string.

Replace those with a unique string of characters found in the user-agent string of the bot you want to block. Generally, the unique part would be the bot's name. It might also be a URL if the user-agent string contains one.

As many bots as you wish can be added, one bot per line.

Regular expression patterns can be used when specifying the unique string of characters. It's a huge subject, regular expressions, and outside the scope of this article. A search for "regular expression tutorial" will yield much information.

The BadBot in the example blocking code is a variable that's set to the digit 1 if the bot's user-agent string contains the unique string of characters you specified.

The last three lines of the example blocking code are left as is. The server will deny access if the value of BadBot is the digit 1.
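As a small illustration of the regular expression option mentioned above, the two SetEnvIf lines could be collapsed into one. This is only a sketch. It assumes the SetEnvIfNoCase directive of Apache's mod_setenvif module is available on your server, and it reuses the same two example bot names.

```apache
# One pattern covers both bots; the | character means "or".
# SetEnvIfNoCase ignores letter case, so "sitesucker" would also match.
SetEnvIfNoCase User-Agent "SiteSucker|WebReaper" BadBot=1
```

The remaining three lines of the blocking code stay unchanged.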

The spider/robot blocking method can be used when there are one or more specific bots that must be kept out of your directory or out of your entire website.
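The Order, Allow, and Deny lines in the example blocking code are the older Apache 2.2 style. On Apache 2.4 they work only when the mod_access_compat module is loaded. If your host runs Apache 2.4 without that module (an assumption you would need to check with your host), a rough equivalent using the newer Require directive might look like this.

```apache
# Flag the unwanted bots exactly as before.
SetEnvIf User-Agent SiteSucker BadBot=1
SetEnvIf User-Agent WebReaper BadBot=1

# Apache 2.4 style: allow everyone except requests flagged as BadBot.
<RequireAll>
    Require all granted
    Require not env BadBot
</RequireAll>
```

It is generally best to use one style or the other in a given .htaccess file, not both.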

Password-required Access Only

Your hosting company may provide cPanel or another interface where you can set up password protection for a directory. If so, use that.

Otherwise, the How to Password Protect a Directory on Your Website page has how-to information.

This method is specifically for requiring a username and a password for accessing a directory and its subdirectories.
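For orientation, password protection set up by hand on an Apache server commonly comes down to a few .htaccess lines like the sketch below. The realm name and the password file path are hypothetical placeholders, and the linked how-to page may describe the steps differently. The password file itself is typically created with Apache's htpasswd utility and is best kept outside the document root.

```apache
# Ask the browser for a username and password (HTTP Basic authentication).
AuthType Basic
# Text shown in the browser's login prompt (hypothetical name).
AuthName "Restricted Area"
# Location of the password file (hypothetical path, outside the document root).
AuthUserFile /home/example/.htpasswd
# Any user listed in the password file may enter.
Require valid-user
```

Because Basic authentication sends credentials with every request, it is normally paired with HTTPS.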

Cookie-required Access Only

To require a specific cookie for access to a directory, see this how-to article.

This is the technique to use when you wish to require a specific cookie to allow access to a directory and its subdirectories.
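One possible way to sketch the idea, borrowing the style of the bot blocking code earlier in this article, is shown below. It is not necessarily the method the linked how-to article uses. The cookie name "access" and value "granted" are hypothetical, and the Order, Deny, and Allow lines assume Apache 2.2 or Apache 2.4 with mod_access_compat.

```apache
# Set HasCookie when the request's Cookie header contains access=granted
# (hypothetical cookie name and value).
SetEnvIf Cookie "(^|;\s*)access=granted(;|$)" HasCookie=1

# Deny everyone, then let in only requests that carry the cookie.
Order deny,allow
Deny from all
Allow from env=HasCookie
```

Keep in mind that a cookie can be set by hand in a browser, so this keeps out casual visitors more than determined ones.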

Access Only When the URL of a Document Is Known

In some instances, a directory file listing can be delivered to a browser or bot. The listing is a list of file names the directory contains.

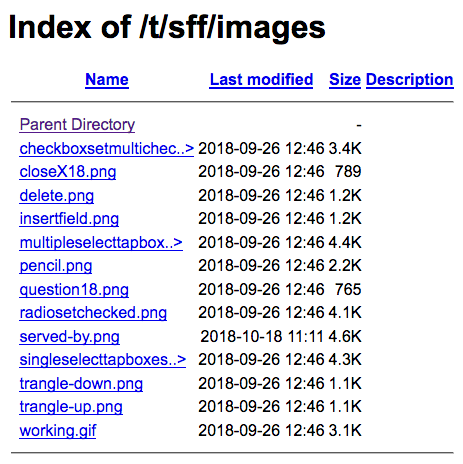

Here is a screenshot illustration.

To get the list, a browser (or bot) requests the directory, minus a file name. http://example.com/directory/ is an example. The request is for the directory, not for a file in the directory.

If an index page is in that directory, the server will deliver the index page, not a file listing.

When there is no index page, then whether or not the server responds with a directory file listing depends on how the server is configured.

Generally, hosting companies set up new accounts with directory file listing turned off. But it sometimes can be turned on via the site's dashboard. Or, it can be turned on with the line of code presented further on in this section.

But let's first learn how to turn it off.

To turn file listing off for a directory, put this line into the .htaccess file of the directory you want to protect.

Options -Indexes

That causes the server to respond with a 403 Forbidden status when a browser or bot asks for the directory and no index file is present.

No browser or bot can call up the directory and get a list. But they can still access a page or other document in the directory if they already know its URL.

To turn directory file listing off for an entire website, put the above line into the .htaccess file located in the domain's document root directory.

If you do turn it off for the entire website, it can be turned on for a specific subdirectory (and all its subdirectories) with this in the subdirectory's .htaccess file.

Options +Indexes

That turns directory file listing on. A browser or bot requesting the directory will be served with a list of files in that directory.

The "+" character in front of the word "Indexes" turns directory file listing on. The "-" character turns it off.

Turning directory file listing off generally is sufficient protection when you want to link to certain individual files in the directory but don't want to expose the entire list of files the directory contains.

Block All Internet Traffic

To block all internet traffic from a directory, put this line into the directory's .htaccess file.

deny from all

Software on your server can still read the files through the file system. But nothing can reach the directory over HTTP or HTTPS, and that includes Ajax requests.

This is the most effective blocker of internet traffic in the list of directory content protection methods this article presents. It is rather draconian — no internet traffic at all can get in there, for any reason. All will receive a 403 Forbidden error.

But, as stated, it does allow scripts on your server to access the files directly.
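The "deny from all" line is Apache 2.2 syntax. On Apache 2.4 it works only when the mod_access_compat module is loaded. Assuming your server runs Apache 2.4 without that module, the equivalent would be along these lines.

```apache
# Apache 2.4 style: refuse every HTTP and HTTPS request for this directory.
Require all denied
```

Either way, the visitor receives a 403 Forbidden response.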

Hide the Directory Itself

To hide a directory, it must be a subdirectory of a subdirectory.

Pretend the second subdirectory is actually a file.

Now, implement one of the directory content protection methods presented in this article.

The first subdirectory has directory protection implemented. The second subdirectory, the one within the protected subdirectory, then has all the protection a file would have.

This method can be used to hide the name of a subdirectory when the name may provide clues to crackers or when there are other reasons for keeping the directory name secret.
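As a sketch of the idea, suppose the layout is /outer/secretname/, where both directory names are hypothetical. Turning off file listing in the outer directory, one of the methods presented earlier, keeps the inner directory's name from being revealed to anyone who requests /outer/.

```apache
# .htaccess in /outer/ (hypothetical directory name)
# With listing off, a request for /outer/ gets 403 Forbidden when no
# index file is present, so the name of /outer/secretname/ is not shown.
Options -Indexes
```

Anyone who already knows the full URL of the inner directory can still reach it, just as with a file, unless a stricter method is applied.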

The Various Ways

Those various ways to protect directory contents, and a directory itself, should be sufficient to meet most requirements. Select the method that provides the protection you need.

If none fit your requirements, there are other ways. A search can reveal many.

Or, if you would like us to implement something for you, use our contact form and let us know what your requirements are.

(This article first appeared with an issue of the Possibilities newsletter.)

Will Bontrager